|

Pandorabots Technology Deep Dive¶

Pandorabots seeks to improve man-machine communication. Toward this goal we apply technologies from the field of Artificial Intelligence. We offer a development and publishing platform supporting the creation and deployment of artificial entities capable of conversational interaction.

In pursuit of our man-machine communication goals we are truly agnostic. We will use anything that works - so much so, that we invite developers of the next great advance to contact us at info@pandorabots.com, and we will happily incorporate your technology into our platform.

Our platform supports many independent Machine Learning technologies. During the past 50 or so years, a variety of widely diverse Machine Learning technologies have emerged, each with the goal of supporting learning. Each has its own proponents and detractors.

How does Machine Learning Work?¶

Here is an explanation aimed at simply describing how the technology works. Our explanation is loosely based on the Artificial Intelligence Markup Language (“AIML”) one of the many diverse technologies (c.f. Classifier) found in the field of Artificial Intelligence. But for purposes our story, you won’t have to know any of these details. So let’s start with the story.

The Gist¶

Linguists and others point out that since we can potentially create an infinite number of sentences, how is it possible to create a computer program capable of handling conversational interactions?

Let’s start with any book - say Moby Dick - so we can have a real example in mind. Make a list (the “Moby Dick List”) of all of the words in the book - it will be a long list, but it won’t be as big as the dictionary. Now order the list (in descending order) by the number of times each word appears. So near the top of the list we will see words like: “the”, “of”, “and”, “a”, “to”, “in” and so on. Next to each word record how many times the word appears. So a fragment of our list might look like a bunch of pairs: (“the”, 19,815), (“and”, 7,91), and so on (try out some words for yourself at Moby Dick Word Frequencies).

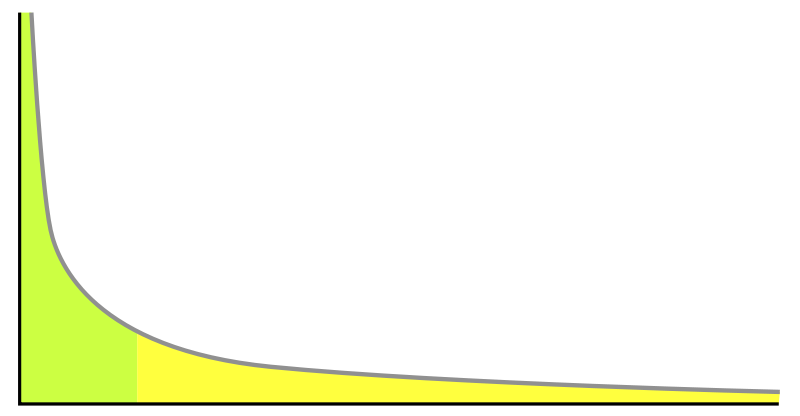

Next create a graph using these pairs - with the y-axis representing the number of times each word occurs and the x-axis representing each separate word in our Moby Dick List (in descending order). The actual graph for this particular book exists on the internet at Moby Dick and the Power Law and is less important than the general shape of the graph - which turns out to be similar for any book. So here’s the general shape (with thanks to Wikipedia and the Power Law):

To recap, there are only two things that are important here:

- The general shape of the graph is independent of the book we start with, and

- The shape of the graph suggests the data conforms to Zipf’s Law or sometimes known as the Power Law.

So What Does All This Mean?¶

In simple terms, there is a group of words in our Moby Dick List that appear often, and then a bunch of words that rarely appear. How is this helpful? Well, we started with one book - Moby Dick, but suppose we now restart the entire story with 100,000 books. And instead of considering individual words, we instead consider sentences. So we make a list of unique sentences and order them in descending order of occurrence. We graph these and again we get a similar curve. What this tells us is that we can expect to encounter a relatively small group of sentences that occur many times and a bunch sentences that rarely appear. And from this we can speculate that most of the inquiries directed at a software robot will have a similar distribution: a bunch that occur very often, and another collection that occur infrequently. So if we teach a software robot how to interact with the group that appears often then the software robot will immediately and quite often return acceptable answers. And over time we can create content for the infrequently encountered inquiries.

OK, so we have a nice story with a bunch of speculations. Is any of this really useful? YES! A very acceptable software robot can be created when the inquiries come from a local geographic area. How do we know this? Pandorabots has processed more that 2 billion interactions from around the world. Our data suggests that, in any geographic area, most people employ about 10,000 sentences routinely and reflexively. Yes, people are definitely capable of generating any number of sentences, but for the most part they are content to use those they already know, and infrequently add attractive new sentences they happen to encounter.

So What’s the Conclusion?¶

To produce an interesting and useful software robot one need only find the top 10,000 or so sentences (along with their variants) that people typically employ in a given geographical region. This is just a small Data Mining problem. A software robot taught to converse using these 10,000 sentences will be deemed good enough by the average person. So, suppose you know the top 10,000 sentences most frequently encountered. How long does it take to answer them? Assuming content creation averages about 1 minute per sentence, you need about one month of full time work. In actual practice you need about 3 times this amount for testing and quality control.

Why Do You Use AIML?¶

When we created the Pandorabots’ platform (in 2001) we started with AIML. We made this choice because AIML was widely used, an open standard, and simple to understand. For those with a technical background, AIML can be thought of as an Assembly Language. While easy to understand by non-technical people, programmers often yearn for higher-level tools to automatically generate AIML. We’ve built some of these tools, and more need to be built. As mentioned earlier we are agnostic toward the tools and so other Machine Learning approaches distinct from AIML are also available on Pandorabots platform.

What Are AIML’s Advantages Over Other Machine Learning Approaches?¶

AIML is simple for non-programmers to learn and use. Content can be incrementally acquired for a specific domain and over time becomes increasingly accurate. Content curation and maintenance is very easy. It is easy to implement an AIML interpreter and so creating content for small - non-internet connected devices is simple and straight forward. These devices need not be internet connected.

What Are AIML’s Disadvantages Over Other Machine Learning Approaches?¶

AIML 1.0 can be tedious to write and debug. AIML 2.0 addresses many of the shortcomings of AIML 1.0. While it is conceptually it is easy to develop visual debugging tools, no one has yet finished such a project.

What About Siri?¶

Before addressing Siri, please stop reading now and listen to a couple minute fragment of Simon Sinek’s Ted Talk beginning at 8:10 on the Wright Brothers. Sinek’s has a marvelous rendition of this story - one you may have already heard, but is directly relevant to this question. If you really want to skip the talk here’s the essence of the story:

Around the turn of the century (1900s) the US Government and public were enthralled by the possibility of winged flight. Samuel Pierpont Langley, a highly regarded scientist and senior official at the Smithsonian Institution - with his friends including some of the most powerful men in government and industry of the time - was provided a War Department grant to demonstrate winged flight. Langley assembled a team comprised of the best people of the time. And through serialized weekly NY Times articles and numerous media-related stories, the public avidly followed his progress.

A few hundred miles away, laboring away in obscurity in a bicycle shop, Wilbur and Orville Wright, both without high-school degrees - indeed - none of their helpers had advanced degrees either - demonstrated winged flight on December 17, 1903. It took months for the rest of the world to even learn this story. Moreover, presumably embarrassed government-related people sought to discredit the accomplishment by falsifying evidence suggesting that Langley had successfully demonstrated a flight-capable machine before the Wright brothers actual flight. Their efforts failed but only after a long tiresome fight.

Why is this story relevant? The Department of Defense and other government entities expended billions of dollars over the last 40 years developing Artificial Intelligence technology. In pursuit of these goals the best minds at the largest institutions were recruited and became happy recipients of these funds. Government entities have long sought the ability of reading and understanding natural language texts (books, magazines, email, etc). Although both the donors and recipients of these grants will object strenuously - very little of practical substance has come of these efforts. Both Government Program Managers and Researchers working on natural language processing software - are quite careful to say “it does not work yet but we are cautiously optimistic we can make it work in the near future”. And huge money continues to flow, year after year. Siri came out of such a government project.

It is interesting to note that none of the software robots funded by US Government money have ever participated in the annual software robot contests. Why not? Such entrants will be demolished by the software robots developed by so-called amateurs. Siri can’t even make it to the judging rounds of any of the annual contests. The Program Managers and Researchers prefer obscurity to negative publicity. We could imagine lame excuses like: “We won’t enter our Ferrari against your VW Beetle”.

How Does a Pandorabot Differ From SIRI?¶

Low-level Technical Details¶

At a low-level, AIML is used to specify patterns (of natural language) that trigger responses. This technology is known as a Classifier. Siri‘s technology also falls into the Classifier bucket, though it uses statistical approaches rather than pattern matching.

AIML-based pandorabots are developed using Supervised Learning techniques. It is unknown what Siri uses.

Practical Differences¶

Pandorabots may reside locally on a device, and continue to work in the absence of an internet connection. Pandorabots can learn and retain intimate details about users.

As a developer it is quite easy to develop domain-specific pandorabots. Spatial and time context is important, and can be incorporated into the content. Developers can easily incorporate external-acquired data mashups via service APIs into the content. Pandorabots also connect into Semantic Databases.

Pandorabots are immediately multi-lingual - so they will work in any environment. Pandorabots currently uses Google speech APIs to recognize speech, but other speech models can easily be incorporated.

Siri must be connected to the internet. Siri’s data is completely controlled by Apple. Siri works in limited foreign domains. Siri uses Nuance for speech recognition.

Last update:

Feb 15, 2014